TL;DR

About

- [*]Freelance System Architect, Software Engineer, Full-stack Developer focused on scalable, distributed, event-driven systems.

- [*]Graduated from the Technical University of Munich (Electrical Engineering/Data Processing).

- [*]35+ years of engineering expertise.

Skills

Core Technology Stack

- [*]Primary Runtime — Node.js, C/C++, Java

- [*]Persistence — TimescaleDB/PostgreSQL, GCP Datastore, LMDB

- [*]Communication — WebSockets (Socket.IO), WebSphere MQ, SNA LU0, RS232

- [*]Infrastructure — Docker, Docker Compose, Linux/Raspberry Pi OS, PM2

- [*]Methodologies — TDD (Jasmine, Karma), Mono-repo architecture, Functional Programming

Programming Languages

JavaScript, C/C++, Java

Backend & Runtime

Node.js, Java EE, Electron

Web & Mobile

HTML5/CSS3, Express, Cordova

Embedded

Raspberry Pi, Raspberry Pi OS

Operating Systems

Linux, macOS, Windows, Android

Data Systems

PostgreSQL, TimescaleDB, MySQL

Networking & Protocols

REST/HTTP(S), TCP/IP, Socket.IO, SNA LU0, RS232

Services

- [*]Software Architecture & System Modernization — Scalable, Distributed, Secure, Reliable

- [*]Full-Stack & Application Development — Cross-Platform, Desktop & Mobile, Hybrid & Native

- [*]Embedded & Low-Level Systems — Firmware, Hardware-Near, IoT Integration

- [*]Technical Leadership & Advisory — Strategic Consulting, Team Enablement, Project Rescue

- [*]Experience & Industry Background — Electrical Engineering, Medical Engineering, Automotive, Telecommunications, Media, Finance/FinTech

Services & Expertise

Bridging the gap between hardware, software, and business goals with 35+ years of engineering expertise.

Software Architecture & System Modernization

- [*]Architectural Design: Blueprinting scalable, distributed, and event-driven systems that grow with your business.

- [*]Modernization: Analyzing legacy monoliths and executing strategic refactoring into modular or service-based architectures.

- [*]Integration: Expert API design and complex system integration.

- [*]Resilience: Ensuring non-functional requirements — security, high availability, and deterministic performance — are built in, not bolted on.

Full-Stack & Application Development

- [*]Web & Cloud: Full-stack development using modern frameworks and standards.

- [*]Cross-Platform Solutions: Building desktop and mobile applications — Hybrid, Native — that function seamlessly across environments.

- [*]Frontend Engineering: Implementing component-based architectures with a focus on performance, accessibility, and strict UI/UX fidelity.

Embedded & Low-Level Systems

- [*]Firmware Development: Engineering hardware-near software with strict timing and resource constraints.

- [*]IoT & Integration: Bridging sensors, controllers, and field buses with higher-level cloud and application layers.

- [*]Reliability: Delivering stable solutions for critical environments (Automotive, Medical, Industrial).

Technical Leadership & Advisory

- [*]Strategic Consulting: Architecture reviews, code audits, and technical due diligence for investors or stakeholders.

- [*]Team Enablement: Mentoring in-house teams, introducing best practices, and bridging the gap between management and engineering.

- [*]Project Rescue: Stabilization and turnaround of at-risk software projects.

Experience & Industry Background

- [*]Core Sectors: Electrical Engineering, Medical Engineering, Automotive, Telecommunications, Media, Finance/FinTech.

- [*]Contexts: IT & Enterprise Software, Public Sector, Retail Systems.

Skills

Technical Expertise

Functional Programming & Data Orchestration

- [*]Fluent Interface Design: Expert in architecting "chainable" APIs and functional pipelines that prioritize readability and maintainable data flows.

- [*]Dynamic Data Structures: Implementation of complex data patterns, including self-referencing JSON engines and recursive schema management (Hookney).

- [*]Reactive & Event-Driven Systems: Proficient in real-time bidirectional communication and state-managed event cycles.

High-Performance UI & DOM Engineering

- [*]Programmatic UI Construction: Specialist in lightweight, framework-agnostic HTML rendering and direct DOM abstraction (Hummersmyth).

- [*]Modular Component Design: Building reusable, decoupled UI libraries for both research dashboards and enterprise administrative tools.

Distributed Systems & System Integrity

- [*]Edge Computing & IoT: Architecting resilient gateways that manage physical hardware states, power cycles, and local-first data persistence.

- [*]Legacy-to-Modern Migration: Expert in porting mission-critical C++/SNA stacks to modern Java/WebSphere MQ architectures.

- [*]Cryptographic & Secure Systems: Deep experience in HSM integration, EPP hardware drivers, and secure banking protocol implementation.

Skill Level Color Code

- [*]Core Mastery: The foundations of my technical career and areas of deepest architectural influence.

- [*]Deep Expertise: Technologies and methodologies I have deployed across numerous high-stakes projects.

- [*]Proficient & Proven: Solid technical command with a track record of successful implementation.

- [*]Technically Fluent: Modern tools and frameworks I use confidently in professional environments.

- [*]Working Knowledge: Additional technologies I have worked with or integrated into larger systems.

Detailed Skills

Programming Languages

Operating Systems

Backend

Database Systems

Web Technologies

Mobile Development

Infrastructure

Frameworks / Libraries / APIs

Server-side / Backend

Client-side / GUI

JavaScript

CSS

Hybrid

Cloud Development

Embedded System Development

Web Services

Networking

Protocol Suite / Application layer (OSI 5,6,7)

Protocol Suite / Transport layer (OSI 4)

Protocol Suite / Internet layer (OSI 3)

Protocol Suite / Link layer (OSI 2)

Digital Equipment Corporation

IBM

RPC

Serial

Software

Crypto

Financial Services / Banking / Special Hardware

Software

Hardware

Work (Excerpts)

Over the past three decades, I have partnered with industry leaders, innovative mid-sized companies and startups to deliver high-performance software solutions. My project history spans the entire lifecycle — from initial architectural discovery to long-term system maintenance.

My career has been defined by the cross-pollination of technical disciplines. I believe that the most robust solutions are found at the intersection of industries.

My work in Medical Engineering taught me the uncompromising necessity of safety and deterministic reliability. My experience in FinTech honed my approach to high-security architectures and complex audit trails. In Automotive and Industrial projects, I mastered the art of hardware-software synergy.

I bring this multi-faceted perspective to every engagement. For my clients, this means I don't just solve the problem at hand — I bring 'battle-tested' methodologies from across the technological landscape to ensure their system is resilient, secure, and architecturally sound.

Below is a curated overview of representative projects where I served as a Lead Architect, Senior Software Engineer, Full-stack Developer, Project Author, or Technical Consultant.

Overview

FaW Data Collector & Research Framework / Fields at Work / 2023 - Today

This project reflects a high level of software engineering maturity. It goes beyond application development by addressing framework-level design concerns and building data infrastructure suitable for research-oriented use.

Building a mono-repository of specialized libraries (frameworks) solved more than a data problem: it also created a reusable platform for large-scale scientific research.

The FaW Data Collector is a comprehensive system designed to ingest, process, and persist measurement data from a variety of sources, primarily mobile devices and environmental sensors. It serves as the backend infrastructure for several research initiatives, ensuring that high-volume time-series data is reliably collected and stored for analysis.

Currently, the system powers data collection for, among others:

- [*]ETAIN (Exposure To electromAgnetic fIelds and plaNetary health): Handling measurement data for several Work Packages.

- [*]EMEROS (University of Geneva): A dedicated instance for specific research data schemas.

- [*]ExpoMConnected: Collecting data from ExpoM RF measurement devices.

Role

- [*]Chief Software Architect

- [*]Framework Engineer

- [*]Senior Full-stack Developer

Sector

- [*]Scientific Research

- [*]Environmental Monitoring

- [*]Time-Series, Big Data

The Challenge: Harmonizing Heterogeneous Research Data

Scientific research projects like ETAIN and collaborations with the University of Geneva generate vast amounts of disparate data, from mobile phones and mobile environmental sensors to complex physiological measurements. The challenge was to create a unified, scalable system capable of ingesting high-frequency, time-series data from multiple sources while ensuring data integrity, high-speed retrieval, and architectural flexibility for varying research schemas.

The Solution: A Specialized Framework Architecture

Instead of building isolated applications, a proprietary mono-repo ecosystem of Node.js libraries was engineered. This framework provides a standardized foundation for rapid deployment across different research work packages.

- [*]Custom Framework Engineering:

- [*]Bethnel & Greedwich: Developed a "configuration-first" backend foundation and a data-orchestration layer that decouples data transport from payload content, allowing for extreme flexibility in data schemas.

- [*]Pypelyne: Implemented a modular data transformation engine, enabling complex ETL (Extract, Transform, Load) processes to be defined as discrete, testable steps.

- [*]Brydge: Built a bidirectional communication layer leveraging socket.io for real-time telemetry and API-driven resource fetching.

- [*]Time-Series Optimization: Optimized the persistence layer using TimescaleDB/PostgreSQL. The schema was designed to handle the unique indexing and partitioning requirements of high-volume environmental measurement data, ensuring high performance for long-range analytical queries.

- [*]Modular Collector Implementation: Leveraging the core libraries, specialized collectors were deployed as Dockerized microservices. This allows for isolated scaling and environment-specific configurations (Dev/Test/Prod).

- [*]Analytical Tooling: Developed a CLI-based analysis suite that allows researchers to evaluate, convert, and extract insights directly from the TimescaleDB/PostgreSQL backend, bridging the gap between raw storage and scientific evaluation.
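
The idea of expressing ETL work as discrete, testable steps can be sketched in a few lines. This is only an illustration under assumptions: `createPipeline`, `step`, `run`, and the three sample steps are hypothetical names and do not describe Pypelyne's actual API.

```javascript
// Hypothetical sketch: a pipeline is an ordered list of named, pure steps.
// None of these names come from Pypelyne; they only illustrate the idea.
function createPipeline() {
  const steps = [];
  const api = {
    // Register a step and return the pipeline to allow chaining.
    step(name, fn) {
      steps.push({ name, fn });
      return api;
    },
    // Feed the input through every step in registration order.
    run(input) {
      return steps.reduce((value, { name, fn }) => {
        try {
          return fn(value);
        } catch (err) {
          throw new Error(`Step "${name}" failed: ${err.message}`);
        }
      }, input);
    },
  };
  return api;
}

// Each step is a plain function and can be unit-tested in isolation.
const parse = (raw) => JSON.parse(raw);
const dropInvalid = (rows) => rows.filter((r) => Number.isFinite(r.value));
const scaleByThousand = (rows) => rows.map((r) => ({ ...r, value: r.value * 1000 }));

const etl = createPipeline()
  .step('parse', parse)
  .step('dropInvalid', dropInvalid)
  .step('scaleByThousand', scaleByThousand);

const cleaned = etl.run('[{"t":1,"value":0.5},{"t":2,"value":null}]');
console.log(cleaned); // [ { t: 1, value: 500 } ]
```

Because a failing step is reported by name, a broken transformation can be located without stepping through the whole chain.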

Impact: Empowering Global Research

- [*]Standardization: Reduced the time-to-market for new research collectors by reusing the core framework and library suite.

- [*]Scientific Reliability: Provided a robust, persistent data backbone for research projects, ensuring data safety for multi-year environmental studies.

- [*]Scalability: The containerized architecture allowed for the seamless addition of new data sources (like ExpoMConnected devices) without disrupting existing research pipelines.

Technical Overview

The project is structured as a monorepo containing a suite of custom, reusable core libraries (frameworks) and the specific application implementations that utilize them. The stack is primarily built on Node.js and leverages TimescaleDB/PostgreSQL for high-performance time-series data storage.

Architecture & Core Libraries (The "London Stack")

The system uses a set of internal libraries to enforce a modular and configuration-driven architecture:

- [*]Bethnel: The fundamental Backend Framework. It provides a configuration-first approach to building Node.js servers based on Express, handling database abstractions (supporting GCP Datastore, PostgreSQL, LMDB, etc.) and server lifecycle management.

- [*]Greedwich: The Data Processing Platform layer. Built on top of Bethnel, it orchestrates the specific flows of collecting, processing, and storing data. It distinguishes between the raw "data" envelope and the actual "payload".

- [*]Pypelyne: A Pipeline library used to define and execute sequential data transformation and processing steps.

- [*]Brydge: A networking library handling API calls and real-time, bidirectional communication via socket.io.

- [*]Fuelham: The JavaScript Groundwork library, providing essential low-level utilities and helpers.

- [*]Lembeth: A UI Component library for building dashboard interfaces in both frontend and backend contexts.

- [*]Poplayr: A Presentation Framework for visualization tasks.
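
The distinction between the transport envelope and the payload can be illustrated with a small sketch. Everything here is an assumption made for illustration: the field names (`source`, `schema`, `receivedAt`, `payload`), the schema identifiers, and the `wrap` and `dispatch` helpers are hypothetical and are not taken from Greedwich.

```javascript
// Hypothetical sketch of separating a transport envelope from its payload.
// Field names and schema identifiers are assumptions for illustration.
function wrap(source, schema, payload, now = new Date()) {
  return { source, schema, receivedAt: now.toISOString(), payload };
}

// Schema-specific handlers are the only code that looks inside the payload.
const handlers = new Map([
  ['rf-measurement', (p) => ({ band: p.band, value: Number(p.value) })],
  ['gps-fix', (p) => ({ lat: Number(p.lat), lon: Number(p.lon) })],
]);

// The transport layer routes on envelope metadata alone.
function dispatch(envelope) {
  const handler = handlers.get(envelope.schema);
  if (!handler) {
    throw new Error(`No handler registered for schema "${envelope.schema}"`);
  }
  return {
    source: envelope.source,
    receivedAt: envelope.receivedAt,
    record: handler(envelope.payload),
  };
}

const envelope = wrap('device-42', 'rf-measurement', { band: 'FM', value: '0.12' }, new Date(0));
const stored = dispatch(envelope);
console.log(stored);
```

Supporting a new research schema then means registering one more handler; the routing code stays unchanged.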

Applications (Collectors)

The repository contains several distinct Node.js applications that implement the Bethnel/Greedwich stack for specific use cases:

- [*]ETAINDataCollectorWP(x): Specialized collectors configured for the specific data schemas and reporting intervals of the ETAIN project.

- [*]UniGeDataCollector: Tailored for the University of Geneva's study, capable of handling specific sensor and signal data types.

- [*]ExpoMConnected: A gateway/collector for ExpoM RF devices, handling band settings and measurement values.

Data Storage & Analysis

- [*]Database: TimescaleDB/PostgreSQL is the standard for storage, chosen for its efficiency with time-series data (partitioning, compression, and aggregation).

- [*]DataAnalysis: A dedicated CLI tool included in the repo for running offline evaluation, conversion, and gap analysis on the collected datasets.
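
As a rough illustration of what gap analysis on a time series involves, the sketch below scans sorted sample timestamps for missing intervals. It is an assumption-laden simplification: the real tool works against TimescaleDB/PostgreSQL, whereas `findGaps` is a hypothetical helper operating on an in-memory array.

```javascript
// Hypothetical sketch: find gaps in a sorted list of sample timestamps (ms).
// A gap is any interval between consecutive samples that exceeds
// expectedIntervalMs by more than toleranceMs.
function findGaps(timestamps, expectedIntervalMs, toleranceMs = 0) {
  const gaps = [];
  for (let i = 1; i < timestamps.length; i += 1) {
    const delta = timestamps[i] - timestamps[i - 1];
    if (delta > expectedIntervalMs + toleranceMs) {
      gaps.push({
        from: timestamps[i - 1],
        to: timestamps[i],
        // Number of samples that should have arrived inside the gap.
        missing: Math.round(delta / expectedIntervalMs) - 1,
      });
    }
  }
  return gaps;
}

const samples = [0, 1000, 2000, 6000, 7000];
const gaps = findGaps(samples, 1000, 100);
console.log(gaps); // [ { from: 2000, to: 6000, missing: 3 } ]
```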

Infrastructure & Technology Stack

- [*]Runtime: Node.js

- [*]Database: TimescaleDB (PostgreSQL extension for time-series).

- [*]Deployment: Docker & Docker Compose.

- [*]Communication: HTTP/HTTPS and WebSockets (socket.io).

- [*]Build System: Grunt.

- [*]Testing: Jasmine, Karma, NYC (Istanbul) for coverage.

Runtime environment

- [*]Linux

- [*]CentOS

- [*]macOS

- [*]Node.js

Development stack

- [*]JavaScript

- [*]Git

ExpoM-RF4 IoT Gateway / Fields at Work / 2023 - 2024

Role

- [*]Lead Architect

- [*]IoT Systems Engineer

Sector

- [*]Industrial IoT

- [*]Telemetry

- [*]Remote Sensing

The Challenge: Achieving Field Autonomy

The objective was to deploy a Raspberry Pi-based gateway capable of operating autonomously in remote locations to collect RF measurement data. The hardware presented a unique constraint: it could not charge its battery while in "Data Mode." Manual intervention was not an option, requiring a software-defined solution to manage the physical hardware state, power cycles, and data persistence over unstable network links.

The Solution: Hardware-Software Orchestration

The solution is a resilient Node.js application that treats the physical hardware environment as a state-managed entity.

- [*]State-Driven Power Management: Developed a custom orchestration loop that toggles the device between "Charging" and "Data" modes. This was achieved by interfacing directly with the host OS's GPIO subsystem (using libgpiod) to control a USB-power-switching bridge, ensuring the device remains powered indefinitely in the field.

- [*]Protocol Engineering: Implemented a robust serial communication stack using serialport. Authored the command/response logic to handle the device's proprietary binary protocol, including memory address management and multi-packet data extraction.

- [*]Edge Data Transformation: Built a sophisticated processing pipeline. Raw datasets are extracted, combined with GPS telemetry, and passed through a calibration engine (ExpoMRF4Calibrator) to normalize the data before it leaves the edge.

- [*]Fail-Safe Data Persistence: To combat intermittent field connectivity, a "Local-First" storage strategy was implemented. Data is buffered as validated JSON files in a local directory. A specialized MeasurementDataSender handles the asynchronous upload, employing a strict Transaction-Safe protocol.
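
The toggling between "Charging" and "Data" modes can be pictured as a small state machine. The sketch below is hypothetical: it assumes a purely time-based cycle and injects the hardware switch as a callback (`switchUsbPower`), whereas the real gateway drives a USB power-switching bridge through GPIO and may use different criteria for changing modes.

```javascript
// Hypothetical sketch of a two-state power/data cycle.
// The dwell times and the switchUsbPower callback are assumptions.
const MODES = Object.freeze({ CHARGING: 'CHARGING', DATA: 'DATA' });

function createModeController({ chargeMs, dataMs, switchUsbPower }) {
  let mode = MODES.CHARGING;
  let enteredAt = 0;

  return {
    get mode() {
      return mode;
    },
    // Call periodically with the current time; toggles when the dwell expires.
    tick(nowMs) {
      const dwell = mode === MODES.CHARGING ? chargeMs : dataMs;
      if (nowMs - enteredAt < dwell) return mode;
      mode = mode === MODES.CHARGING ? MODES.DATA : MODES.CHARGING;
      enteredAt = nowMs;
      // Assumption: USB power is enabled only while in charging mode.
      switchUsbPower(mode === MODES.CHARGING);
      return mode;
    },
  };
}

const switchLog = [];
const controller = createModeController({
  chargeMs: 60000,
  dataMs: 10000,
  switchUsbPower: (on) => switchLog.push(on ? 'power on' : 'power off'),
});

controller.tick(30000); // still charging
controller.tick(60000); // switches to DATA
controller.tick(65000); // still in DATA
controller.tick(70000); // switches back to CHARGING
console.log(controller.mode, switchLog); // CHARGING [ 'power off', 'power on' ]
```

Keeping the decision logic free of hardware calls means the cycle can be tested with simulated timestamps, without a Raspberry Pi attached.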

Technical Stack & Reliability

- [*]Core Logic: Node.js (Asynchronous I/O for concurrent data processing and hardware control).

- [*]Hardware Interface: GPIO (via shell integration), Serial/UART, USB Bridge (cp210x/ftdi).

- [*]Resilience Tools: PM2 for process monitoring, winston for forensic logging, and hookney for safe filesystem operations.

- [*]Environment: Headless Raspberry Pi OS, optimized for low-resource consumption and high uptime.
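
The "Local-First" strategy described above can be sketched with Node.js built-ins alone. This is a simplified, hypothetical illustration: `bufferMeasurement` and `flushBuffer` are invented names, the synchronous file calls are used only for brevity, and "delete only after the upload is acknowledged" is an assumed reading of the transaction-safe behaviour, not the actual MeasurementDataSender implementation.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical sketch of local-first buffering: write first, upload later,
// delete only after the upload is acknowledged.
function bufferMeasurement(dir, measurement) {
  const name = `${Date.now()}-${Math.random().toString(16).slice(2)}.json`;
  const tmp = path.join(dir, `${name}.tmp`);
  // Write to a temp file and rename so readers never see a partial file.
  fs.writeFileSync(tmp, JSON.stringify(measurement));
  fs.renameSync(tmp, path.join(dir, name));
  return name;
}

// upload(measurement) must return true only when the server confirmed receipt.
function flushBuffer(dir, upload) {
  let sent = 0;
  const files = fs.readdirSync(dir).filter((f) => f.endsWith('.json')).sort();
  for (const file of files) {
    const fullPath = path.join(dir, file);
    const measurement = JSON.parse(fs.readFileSync(fullPath, 'utf8'));
    if (!upload(measurement)) break; // keep this and later files for a retry
    fs.unlinkSync(fullPath);
    sent += 1;
  }
  return sent;
}

const bufferDir = fs.mkdtempSync(path.join(os.tmpdir(), 'buffer-'));
bufferMeasurement(bufferDir, { value: 1 });
bufferMeasurement(bufferDir, { value: 2 });
const sentWhileDown = flushBuffer(bufferDir, () => false); // link down
const sentWhileUp = flushBuffer(bufferDir, () => true); // link up
console.log(sentWhileDown, sentWhileUp); // 0 2
```

A failed upload leaves the files untouched, so a later flush simply retries them once the link is back.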

The Impact: Zero-Maintenance Telemetry

- [*]Uninterrupted Data Flow: The system effectively eliminated data gaps caused by battery depletion or network outages.

- [*]Reduced Operational Costs: By automating the hardware power-cycle, the need for site visits to reset or charge devices was reduced to zero.

- [*]Data Integrity: The fail-safe buffering mechanism ensured 100% data delivery, even in environments with less than 50% network availability.

Runtime environment

- [*]Raspberry Pi OS

- [*]Raspberry Pi

- [*]Node.js

Development stack

- [*]JavaScript

- [*]Git

Open-Source Developer Tooling (London Series) / thomasbillenstein.com / 2018

Role

- [*]Lead Author

- [*]Open Source Maintainer

Sector

- [*]Functional Programming

- [*]DOM Abstraction

The Challenge: Reducing Boilerplate in Complex Data Flows

In the development of large-scale JavaScript applications, I found that standard patterns for I18N, DOM manipulation, and function argument handling often became bloated and difficult to maintain. My objective was to create a suite of "tiny but powerful" libraries that provide elegant, fluent interfaces for these foundational tasks, optimized for both the browser and Node.js.

The Solution: Targeted Functional Utilities

This collection provides precise solutions to specific architectural friction points, prioritizing a "Fluent Interface" design (method chaining) and a minimal footprint.

- [*]Hookney: Self-Referencing JSON Engine

Hookney is a helper around self-referencing JSON objects for Node.js and the browser. Hookney supports reading from and writing to files. JSON files may contain comments. This makes Hookney ideal for handling configuration files.

- [*]The Problem: Standard JSON is static and cannot naturally represent data that needs to reference other parts of itself.

- [*]The Solution: A specialized helper for handling Self-Referencing JSON objects. This is particularly useful for complex configurations or data structures where values are derived from other properties within the same object.
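
To make the idea of a self-referencing object concrete, here is a minimal sketch of reference resolution. It is not Hookney's API: the `${path.to.value}` placeholder syntax and the `lookup` and `resolveReferences` functions are assumptions chosen for illustration.

```javascript
// Hypothetical sketch: resolve "${path.to.value}" placeholders inside an
// object against the object itself. Syntax and names are assumptions and do
// not describe Hookney's actual API.
function lookup(root, dottedPath) {
  return dottedPath.split('.').reduce(
    (node, key) => (node == null ? undefined : node[key]),
    root,
  );
}

function resolveReferences(root) {
  const resolveString = (text, seen) =>
    text.replace(/\$\{([^}]+)\}/g, (match, refPath) => {
      if (seen.includes(refPath)) {
        throw new Error(`Circular reference: ${[...seen, refPath].join(' -> ')}`);
      }
      const target = lookup(root, refPath);
      if (target === undefined) return match; // leave unknown references as-is
      return typeof target === 'string'
        ? resolveString(target, [...seen, refPath])
        : String(target);
    });

  const walk = (node) => {
    if (typeof node === 'string') return resolveString(node, []);
    if (Array.isArray(node)) return node.map(walk);
    if (node && typeof node === 'object') {
      return Object.fromEntries(
        Object.entries(node).map(([key, value]) => [key, walk(value)]),
      );
    }
    return node;
  };

  return walk(root);
}

const config = resolveReferences({
  host: 'localhost',
  port: 8080,
  baseUrl: 'http://${host}:${port}',
  api: { users: '${baseUrl}/users' },
});
console.log(config.api.users); // http://localhost:8080/users
```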

- [*]Hummersmyth: The Lightweight HTML Architect

Hummersmyth is a tiny and simple yet powerful HTML renderer and DOM creator for Node.js and the browser. Hummersmyth has no dependencies.

- [*]The Innovation: A high-performance HTML renderer and DOM creator. It allows for the programmatic generation of DOM structures without the overhead of a heavy framework.

- [*]Technical Edge: It bridges the gap between raw string concatenation and complex Virtual DOMs, providing a simple yet powerful API for dynamic UI generation.
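
The middle ground between string concatenation and a virtual DOM can be sketched as a chainable node builder that renders to an HTML string. The `el`, `attr`, and `render` names below are hypothetical and are not Hummersmyth's API.

```javascript
// Hypothetical sketch of a tiny chainable HTML string renderer. It is not
// Hummersmyth's API; el(), attr() and render() are illustrative names.
const VOID_TAGS = new Set(['br', 'hr', 'img', 'input', 'meta', 'link']);

function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

function el(tag, ...children) {
  const attrs = {};
  const node = {
    // Set an attribute and return the node to allow chaining.
    attr(name, value) {
      attrs[name] = value;
      return node;
    },
    render() {
      const attrText = Object.entries(attrs)
        .map(([name, value]) => ` ${name}="${escapeHtml(value)}"`)
        .join('');
      if (VOID_TAGS.has(tag)) return `<${tag}${attrText}>`;
      const inner = children
        .map((child) => (typeof child === 'string' ? escapeHtml(child) : child.render()))
        .join('');
      return `<${tag}${attrText}>${inner}</${tag}>`;
    },
  };
  return node;
}

const html = el('ul',
  el('li', 'Node.js').attr('class', 'item'),
  el('li', 'a < b'),
).attr('id', 'stack').render();
console.log(html);
// <ul id="stack"><li class="item">Node.js</li><li>a &lt; b</li></ul>
```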

- [*]Islyngten: Streamlined I18N Orchestration

Islyngten is a tiny JavaScript library supplying I18N translation support for Node.js and the browser. It provides simple, yet powerful solutions for multiple plural forms and interpolation. Islyngten has no dependencies.

- [*]The Logic: A minimalistic library for Internationalization (I18N). Unlike heavy alternatives, it provides a "bare-metal" way to handle translations in both backend and frontend environments.

- [*]Impact: It allows for highly portable translation logic that can be injected into any part of a system without adding significant weight to the bundle.
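
Plural forms and interpolation can be illustrated with the standard `Intl.PluralRules` API. The sketch is hypothetical: the resource layout, the `{{name}}` placeholder syntax, and the `translate` function are assumptions and do not describe Islyngten's API. The Polish entries show a language with more than two plural forms.

```javascript
// Hypothetical sketch of translation lookup with plural forms and
// interpolation. The resource layout and the {{name}} placeholder syntax are
// assumptions, not Islyngten's API.
const resources = {
  en: {
    files: { one: '{{count}} file', other: '{{count}} files' },
    greeting: 'Hello, {{name}}!',
  },
  pl: {
    files: {
      one: '{{count}} plik',
      few: '{{count}} pliki',
      many: '{{count}} plików',
      other: '{{count}} pliku',
    },
  },
};

function translate(locale, key, params = {}) {
  const entry = resources[locale]?.[key];
  if (entry === undefined) return key; // fall back to the key itself
  let template = entry;
  if (typeof entry === 'object') {
    // Intl.PluralRules maps a number to a CLDR category such as "one" or "few".
    const category = new Intl.PluralRules(locale).select(params.count);
    template = entry[category] ?? entry.other;
  }
  return template.replace(/\{\{(\w+)\}\}/g, (match, name) =>
    (name in params ? String(params[name]) : match));
}

console.log(translate('en', 'files', { count: 1 })); // 1 file
console.log(translate('en', 'files', { count: 3 })); // 3 files
console.log(translate('pl', 'files', { count: 3 })); // 3 pliki
console.log(translate('en', 'greeting', { name: 'Ada' })); // Hello, Ada!
```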

- [*]Pargras: The Fluent Argument Manager

Pargras is a minimalistic helper around function arguments for Node.js and the browser. Pargras has a fluent interface and supports adding, removing and altering arguments before applying them to a function.

- [*]The Focus: A minimalistic helper designed to manipulate function arguments.

- [*]Technical Edge: It features a Fluent Interface that allows developers to add, remove, or alter arguments before they are applied to a target function. This provides a high degree of control over functional composition and "currying" patterns.
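
A fluent argument helper of this kind might look roughly like the sketch below. The method names (`add`, `removeAt`, `alter`, `applyTo`) are hypothetical and chosen for illustration; they do not describe Pargras's actual API.

```javascript
// Hypothetical sketch of a fluent helper for function arguments. Method names
// are illustrative and do not describe Pargras's actual API.
function args(...initial) {
  const list = [...initial];
  const api = {
    add(value) {
      list.push(value);
      return api;
    },
    removeAt(index) {
      list.splice(index, 1);
      return api;
    },
    alter(index, fn) {
      list[index] = fn(list[index]);
      return api;
    },
    // Call the target function with the current argument list.
    applyTo(fn, thisArg = undefined) {
      return fn.apply(thisArg, list);
    },
  };
  return api;
}

const volume = (w, h, d) => w * h * d;

const total = args(2, 'unused', 3)
  .removeAt(1) // -> [2, 3]
  .add(4) // -> [2, 3, 4]
  .alter(0, (w) => w * 10) // -> [20, 3, 4]
  .applyTo(volume);
console.log(total); // 240
```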

The Impact: Micro-Library Philosophy

- [*]Universal Compatibility: Every module is designed to run natively in both Node.js and modern browsers, ensuring consistency across the entire full-stack lifecycle.

- [*]Zero-Bloat Engineering: Keeping these modules "tiny" ensures that they can be dropped into high-performance projects without impacting load times or memory overhead.

- [*]Developer Ergonomics: The use of fluent interfaces across the suite significantly improves code readability and reduces the cognitive load for engineers working with complex data transformations.

Runtime environment

- [*]Node.js

- [*]Browser

Development stack

- [*]JavaScript

- [*]Git

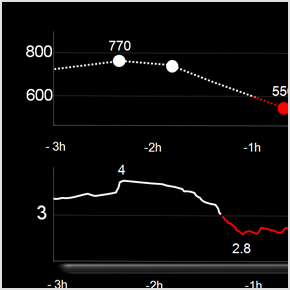

RheoLity / Luciole Medical / 2017 - Today

Role

- [*]Lead Architect

- [*]Senior Software Engineer

- [*]Full-stack Developer

Sector

- [*]Medical Engineering

- [*]Diagnostics

Task

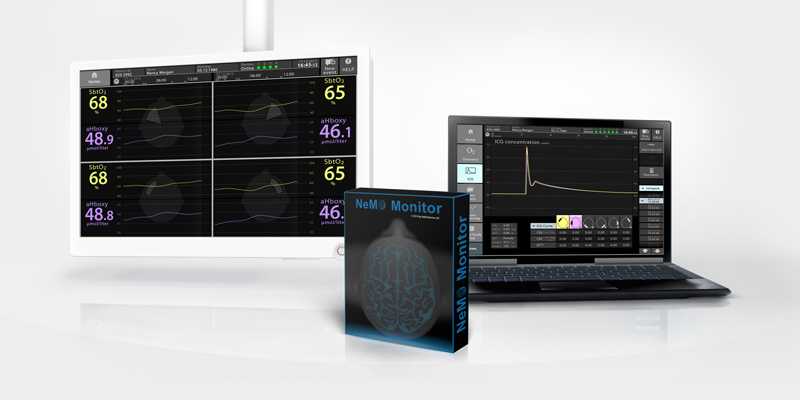

Development of RheoLity, the software component of Luciole Medical's RheoLity System and successor to NeMoDevices' NeMo Monitor.

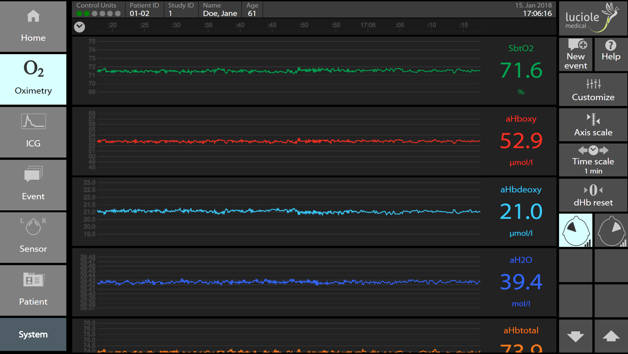

The RheoLity neuromonitoring system is based on optical extinction measurements in the near-infrared region, following the well-established near-infrared spectroscopy (NIRS) principle. In this region of the spectrum, light penetrates biological tissue. Blood, in particular its constituents oxygenated and deoxygenated hemoglobin, acts as an absorber against the background, which allows their concentrations to be quantified.

RheoLity is user-friendly software used in conjunction with RheoControl, RheoSens and RheoPatch; it displays and analyzes the measurement data. Cerebral blood flow (CBF) and oxygen metabolism are analyzed and displayed on the Rheo Monitor. RheoLity manages patient information and the patient measurement series. The software can be run on a standard medical PC.

The Challenge

Development of a high-precision medical diagnostic tool for measuring cerebral blood flow. The project required a sophisticated interface capable of real-time data visualization while maintaining the strict safety and reliability standards of the medical sector.

The Solution

- [*]Real-Time Visualization: Developed a highly responsive frontend for continuous monitoring of cerebral blood flow (CBF) and brain oxygenation.

- [*]Signal Processing Integration: Built the bridge between low-level physiological sensor data and the high-level application layer.

- [*]Medical-Grade UI/UX: Implemented a robust user interface designed for clinical bedside use, prioritizing clarity, accuracy, and reliability.

- [*]System Stability: Ensured deterministic behavior of the monitoring software to support critical healthcare decision-making.

The Impact

- [*]Clinical Readiness: Delivered a production-ready software suite that supports invasive and non-invasive monitoring for acute brain injury patients.

- [*]Precision: Achieved high-fidelity data rendering, allowing doctors to observe minute changes in physiological markers in real time.

Implementation

In contrast to the monolithic hybrid application NeMo Monitor, RheoLity was developed as a multiprocess, multi-tier system with independent components that can be distributed across different platforms (Windows, Linux, macOS, ARM, Browser). The core components (measurement data acquisition, algorithms) are written in C++, the GUI is a browser-based HTML5/CSS3/JavaScript application, and the business logic is implemented as a Node.js/JavaScript application. The individual processes communicate across the network via Socket.IO.

Runtime environment

- [*]Windows 11/10

- [*]macOS

- [*]Linux

- [*]Medical PC

- [*]Node.js

- [*]Electron

- [*]Chromium

Development stack

- [*]JavaScript

- [*]Visual C++

- [*]Git

NeMo Monitor / NeMoDevices / 2011 - 2017

Role

- [*]Lead Architect

- [*]Senior Software Engineer

- [*]Frontend Engineer

- [*]Full-stack Developer

Sector

- [*]Medical Engineering

- [*]Neuro-Monitoring

Task

Development of NeMo Monitor, the software component of NeMoDevices' NeMo System.

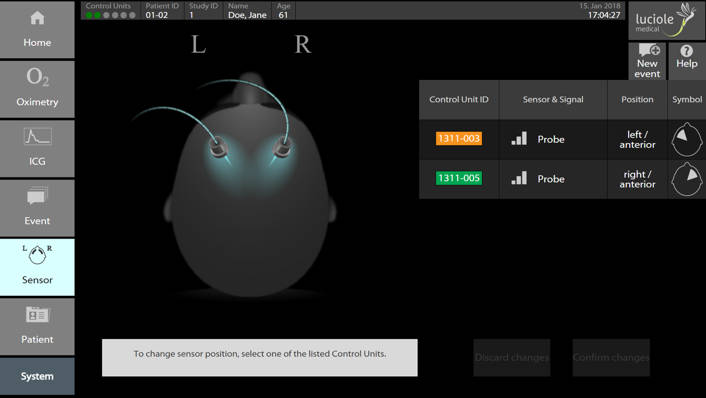

NeMo System is a solution for minimally-invasive (NeMo Probe) as well as non-invasive (NeMo Patch) multi-parameter neuromonitoring in a single system.

The interface of NeMo Monitor is designed for user-friendly, simple and intuitive operation. Connected NeMo Probes and NeMo Patches are automatically detected. The software actively guides the user through measurement procedures while clearly presenting trend curves as well as current measurement values. Customizable views allow NeMo Monitor to be used in various clinical situations.

The Challenge

Architecting the monitoring software for specialized neuro-monitoring devices. The focus was on creating a multi-platform solution that could present complex neurological data in an intuitive way for specialists.

The Solution

- [*]Cross-Platform Architecture: Designed and implemented the monitoring application using Electron to ensure seamless performance across different operating systems.

- [*]Modular Component Design: Developed a component-based frontend architecture that allowed for flexible display of various neurological metrics.

- [*]Data Integrity: Implemented strict data modeling to ensure that the visualization of brain oxygenation parameters remained accurate and synchronized with the hardware.

- [*]Modernization: Leveraged modern web standards to provide a desktop-class experience for high-stakes medical monitoring.

The Impact

- [*]Workflow Efficiency: Simplified complex data streams into actionable visual insights for medical professionals.

- [*]Scalability: Created a maintainable codebase that allows for the future integration of additional sensors and neurological markers.

Implementation

NeMo Monitor is designed and implemented as a hybrid application that embeds a Chromium web page into a desktop application via CEF (Chromium Embedded Framework). GUI and business logic are implemented as an HTML5/CSS3/JavaScript app running inside Chromium, whereas core components, such as collecting metrics from input devices, computing measurement parameter algorithms, storing measurement data on disk and transferring measurement parameters to external medical systems, are provided by a native C++ application. Both parts of the system are connected via a JavaScript/C++ bridge.

Runtime environment

- [*]Windows 8/7

- [*]Medical PC

Development stack

- [*]JavaScript

- [*]Visual C++

- [*]CEF (Chromium Embedded Framework)

- [*]Chromium

- [*]Git

- [*]Subversion

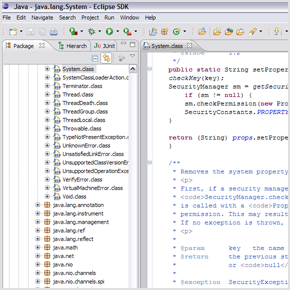

BAP - Kreditsicherheiten / Fiducia & GAD (Atruvia) / 2014 - 2021

Role

- [*]Senior Java Engineer

Sector

- [*]Finance

- [*]Banking

The Challenge

Strategic development and modernization of the "Kreditsicherheiten" (Credit Collateral) subproject — a mission-critical component of the central Bankarbeitsplatz (BAP) ecosystem. The task required navigating the complexities of the Java Based Banking Framework (JBF) to expand functionality while maintaining strict financial compliance.

Key Contributions

- [*]System Evolution: Designed and implemented new high-availability packages and modules to extend the core banking functionality.

- [*]Architectural Refinement: Performed deep-level redesign and refactoring of legacy classes to improve system performance and maintainability.

- [*]Optimization: Identified and resolved architectural bottlenecks, ensuring the JBF-based components met modern enterprise standards.

Runtime environment

- [*]Windows 7

Development stack

- [*]Java EE

- [*]Swing

- [*]Tomcat

- [*]Subversion

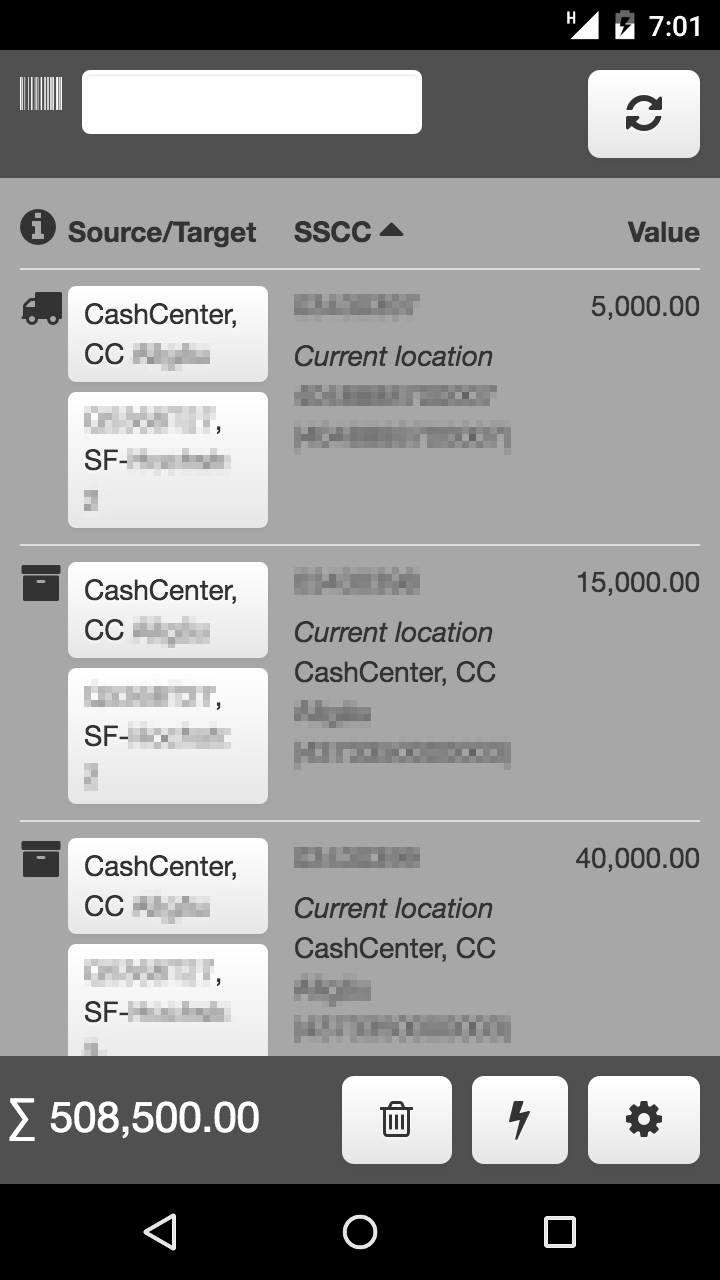

CCO-Track And Trace / planfocus / 2013 - 2014

Role

- [*]Lead Architect

- [*]Senior Full-stack Developer

- [*]Technical Consultant

Sector

- [*]FinTech

- [*]Logistics

Task

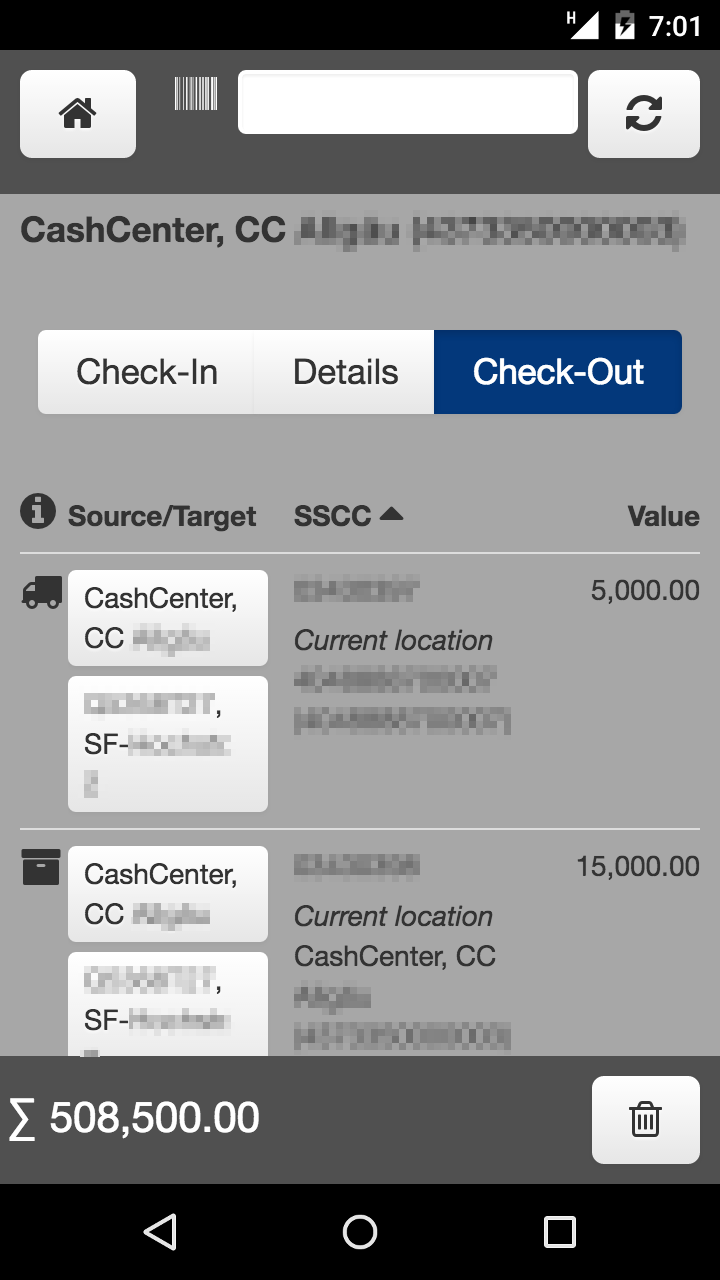

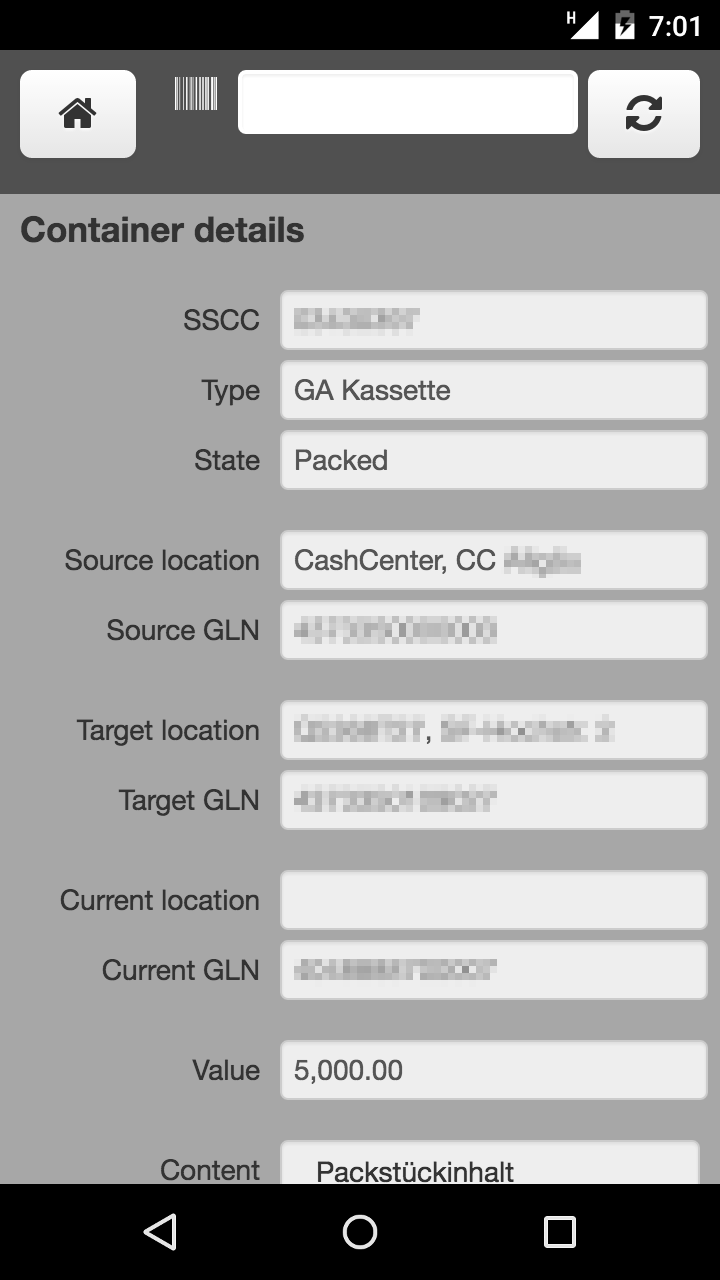

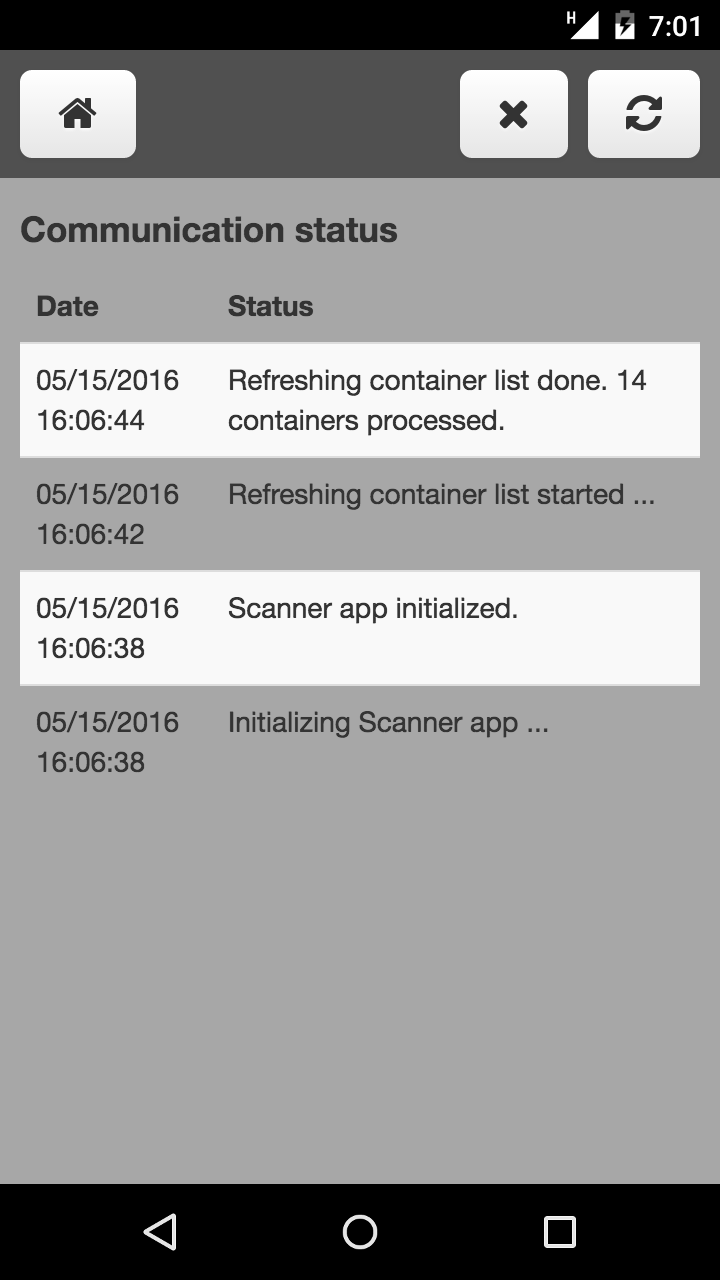

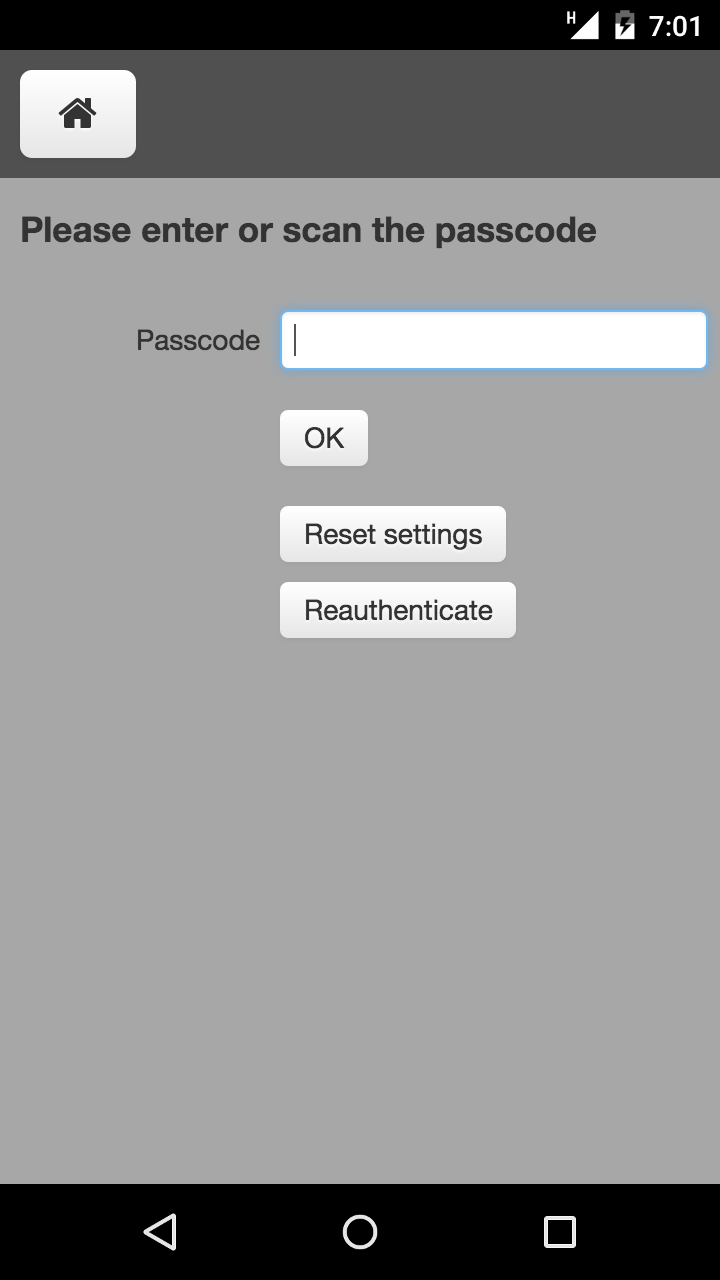

Design and development of CCO-Track And Trace, an important solution component for process optimization within the CCO Solution Suite.

It provides end-to-end track and trace of all cash shipments, whether ATM cassettes or other packages, together with transparent monitoring.

The Challenge

Developing a high-security tracking and tracing system for cash-in-transit (CIT) operations. The system had to handle complex logistics, high-security hardware integration, and real-time status tracking for financial assets.

The Solution

- [*]End-to-End Tracking: Developed the full-stack architecture to manage the lifecycle of assets from pickup to delivery.

- [*]Security Integration: Implemented secure communication protocols between field devices (scanners/locks) and the central management system.

- [*]Real-Time Analytics: Built a dashboard for real-time visibility into the CIT supply chain, enabling immediate intervention in case of discrepancies.

- [*]Robust Data Modeling: Designed a resilient database schema to handle the audit-trail requirements essential for the financial sector.

The Impact

- [*]Risk Mitigation: Enhanced the security of asset transportation by providing 100% transparency in the tracking process.

- [*]Operational Optimization: Reduced manual reporting errors through automated tracking and field-device integration.

Implementation

CCO-Track And Trace is implemented as a Cordova app for Android phone and tablet devices using SpongyCastle/BouncyCastle for strong encryption/decryption of data transfers.

Runtime environment

- [*]Android

- [*]Phone

- [*]Tablet

- [*]Barcode Scanner

Development stack

- [*]JavaScript

- [*]Java SE

- [*]Cordova

- [*]JBoss

- [*]BouncyCastle

- [*]SpongyCastle

- [*]OpenSSL

- [*]Git

- [*]Subversion

Omar / AJ Management Consulting, MBC Group / 2012

Role

- [*]Lead Architect

- [*]Senior Software Engineer

- [*]Mobile Engineer

- [*]Senior Full-stack Developer

- [*]Technical Consultant

Sector

- [*]Media

- [*]Digital Broadcasting

Task

Design and development of a visually appealing companion web and mobile app for MBC Group's TV series Omar.

In 2012 MBC Group decided to multiply the viewing experience for the biggest production in Arab entertainment history by launching a companion web and mobile app for the TV series.

The production of the series Omar is the largest TV production in the history of Arab television to date and comes in several languages. With the TV series, comes this app to complete this initiative and provide value-add content to viewers around the World via their mobile devices.

The Omar application is a nicely visualized interactive timeline (supporting parallax scrolling) that covers the history of Islam and Second Caliph of Islam Omar Bin Al-Kattab through events. The TV series is going to cover around 300 events that happened during the life of Omar from social events to battles and political transformations. The application extends the series content and covers around 1000 events in that era in details.

The application gives users the option to navigate content through different views; Timeline view, List view, Map view, and 3D perspective view, and Episodes view. The second screen experience is mainly delivered through the episodes view where users can watch the full episodes after it runs on TV, read the synopsis of the episode, and learn more information about the events that a specific episode covers.

One great feature to highlight is that for the first time an application covers all events in history on a map. It shows where each of these events took place using Google Map Coordinates which requires a lot of research and editorial efforts.

The application lets users choose between the Gregorian and Hijri calendars when navigating the timeline, which helps them put the events' Hijri dates in context with the Gregorian dates.
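
As a rough illustration of such a calendar switch, the sketch below converts a Gregorian date to the tabular (arithmetic) Islamic calendar via Julian Day numbers. It assumes an arithmetic approximation is acceptable; the method actually used in the app is not documented here, and observed or Umm al-Qura dates can differ from the tabular result by a day or two. All function names are illustrative.

```javascript
// Tabular (civil) Islamic calendar conversion via Julian Day numbers.
// Assumption: an arithmetic approximation, not the app's actual algorithm.
const GREGORIAN_EPOCH = 1721425.5;
const ISLAMIC_EPOCH = 1948439.5; // 1 Muharram 1 AH (civil epoch)

function isGregorianLeap(year) {
  return year % 4 === 0 && (year % 100 !== 0 || year % 400 === 0);
}

// Julian Day (at midnight) of a proleptic Gregorian date.
function gregorianToJd(year, month, day) {
  const y = year - 1;
  const leapAdjust = month <= 2 ? 0 : isGregorianLeap(year) ? -1 : -2;
  return (
    GREGORIAN_EPOCH - 1 +
    365 * y +
    Math.floor(y / 4) -
    Math.floor(y / 100) +
    Math.floor(y / 400) +
    Math.floor((367 * month - 362) / 12 + leapAdjust + day)
  );
}

// Julian Day (at midnight) of a tabular Islamic date.
function islamicToJd(year, month, day) {
  return (
    day +
    Math.ceil(29.5 * (month - 1)) +
    (year - 1) * 354 +
    Math.floor((3 + 11 * year) / 30) +
    ISLAMIC_EPOCH - 1
  );
}

function gregorianToHijri(year, month, day) {
  const jd = Math.floor(gregorianToJd(year, month, day)) + 0.5;
  const hYear = Math.floor((30 * (jd - ISLAMIC_EPOCH) + 10646) / 10631);
  const hMonth = Math.min(
    12,
    Math.ceil((jd - (29 + islamicToJd(hYear, 1, 1))) / 29.5) + 1
  );
  const hDay = jd - islamicToJd(hYear, hMonth, 1) + 1;
  return { year: hYear, month: hMonth, day: hDay };
}
```

A timeline entry could store a single Julian Day number and render it in either calendar on demand, so switching calendars never changes the underlying event order.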

The application offers social actions such as sharing an event on social networks, checking in at an event's location on the map, and browsing the series' social stream on X (Twitter).

The Challenge

The objective was to build a comprehensive digital suite, comprising native Android and iOS applications alongside a robust web-based platform, designed to streamline content workflows and asset accessibility within the ecosystem.

This project involved managing and delivering a suite of applications accompanying the TV series, integrating a visually appealing 3D timeline, an enhanced map, and extensive social integration.

The Solution

- [*]Multi-Platform Engineering: Developed native-quality mobile applications for Android and iOS and a synchronized web application to ensure seamless cross-device functionality.

- [*]API Integration & Orchestration: Designed and implemented several complex APIs to handle real-time content delivery, metadata synchronization, and secure user authentication.

- [*]Data Flow Optimization: Built a resilient data layer capable of handling the high-volume traffic and large-scale asset libraries inherent to a major media conglomerate.

- [*]Technical Quality Standards: Prioritized a clean, testable codebase to ensure the apps remained maintainable as the digital ecosystem continued to evolve.

The Impact

- [*]Unified Ecosystem: Successfully delivered a synchronized multi-platform experience, allowing internal and external stakeholders to access critical assets regardless of device.

- [*]Architectural Scalability: The API-driven approach provided a future-proof foundation, enabling the client to integrate new services and features without disrupting the existing infrastructure.

- [*]Process Speed: Improved internal content management speeds, directly impacting the efficiency of the media delivery pipeline.

Implementation

Architectural Strategy: The core of the Omar project was the strategic use of a unified technology stack to bridge the gap between web and mobile ecosystems. By leveraging a single codebase, I kept the desktop and mobile versions of the application consistent with each other. Key implementation details:

- [*]Single-Source Efficiency: Built using a modern HTML5, CSS3, and JavaScript stack, allowing for rapid cross-platform deployment without duplicating business logic.

- [*]Hybrid Mobile Engineering: Utilized Cordova to wrap web technologies into native-performance containers for iOS and Android, ensuring high-speed delivery to mobile users.

- [*]Cross-Platform Synergy: By using a shared JavaScript development stack, I guaranteed that updates, security patches, and feature enhancements were synchronized across the web and mobile apps simultaneously.

- [*]API-Driven Responsiveness: Developed a flexible frontend that communicated with multiple backend APIs, maintaining high performance and data integrity regardless of the user's device.
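
A minimal sketch of how one codebase can start up in both a plain browser and a Cordova container. `deviceready` is Cordova's startup event; the `onAppReady` helper, the `env` parameter (standing in for the global `window`) and the `start` callback are hypothetical names chosen for illustration, not code from the project.

```javascript
// Start the same application code in a browser or inside a Cordova container.
// Assumption: `env` stands in for `window`; `start` is a hypothetical entry point.
function onAppReady(env, start) {
  const isCordova = Boolean(env.cordova);
  const run = () => start({ isCordova });

  // In a plain browser the DOM may already be parsed: start right away.
  if (!isCordova && env.document.readyState !== 'loading') {
    run();
    return 'immediate';
  }

  // Cordova fires "deviceready" once native plugins are usable;
  // a browser only has to wait for the DOM.
  const eventName = isCordova ? 'deviceready' : 'DOMContentLoaded';
  env.document.addEventListener(eventName, run, false);
  return eventName;
}
```

In a page this would be called as `onAppReady(window, ({ isCordova }) => { /* shared startup code */ })`, keeping platform checks in one place.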

Runtime environment

- [*]Android

- [*]iOS

- [*]Browser

- [*]Phone

- [*]Tablet

- [*]Desktop

Development stack

- [*]JavaScript

- [*]Java SE

- [*]Objective-C

- [*]Cordova

- [*]Git

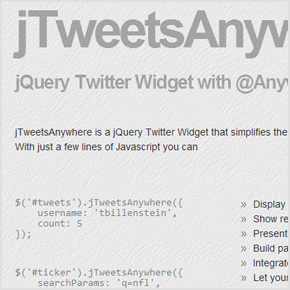

jTweetsAnywhere / thomasbillenstein.com / 2010 - 2012

Role

- [*]Project Author

- [*]Lead Architect

Sector

- [*]Developer Tools

- [*]Social Media Infrastructure

The Challenge (Open Source Initiative)

During the transition to Twitter’s @Anywhere platform, web developers lacked a robust, standardized way to integrate complex social interactions. I identified a gap in the ecosystem for a modular, highly configurable tool that could handle authentication, data streaming, and UI rendering in a single, lightweight package.

jTweetsAnywhere is a comprehensive jQuery Twitter Widget that simplifies the integration of Twitter services. It was designed to provide developers with a powerful, easy-to-use interface for the @Anywhere platform.

The Solution (The Engineering Shift)

I designed the framework to be more than just a widget; it was an extensible Open Source plugin that set a standard for social media integration tools at the time.

- [*]Architecture for Extensibility: Engineered a modular plugin architecture that allowed developers to toggle features (such as "Follow," "Tweetbox," and "Retweet") via a clean, declarative API.

- [*]Community-Driven Quality: As an open-source project, I maintained a rigorous standard for Clean Code and documentation, ensuring the library was stable enough for production use on thousands of websites.

- [*]Complex State Management: Orchestrated real-time interactions and OAuth flows behind a simplified developer interface, hiding the complexity of the Twitter API.

- [*]Cross-Browser Reliability: Ensured the framework performed consistently across all major browsers, a critical requirement for a public-facing developer tool.
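
To illustrate what a declarative feature-toggle API of this kind can look like, here is a small sketch that merges user options over defaults. The option names and the `resolveFeatures` helper are assumptions made for this example and are not jTweetsAnywhere's actual API.

```javascript
// Declarative feature toggles merged over defaults.
// Assumption: option names are illustrative, not jTweetsAnywhere's real options.
const DEFAULT_FEATURES = {
  tweetFeed: { enabled: true, count: 5 },
  tweetBox: { enabled: false, label: 'Say something' },
  followButton: { enabled: false },
};

function resolveFeatures(options = {}) {
  const resolved = {};
  for (const [name, defaults] of Object.entries(DEFAULT_FEATURES)) {
    const value = options[name];
    if (value === undefined) {
      resolved[name] = { ...defaults };
    } else if (typeof value === 'boolean') {
      // `true` / `false` simply switches the feature on or off.
      resolved[name] = { ...defaults, enabled: value };
    } else {
      // An object enables the feature and overrides individual settings.
      resolved[name] = { ...defaults, enabled: true, ...value };
    }
  }
  return resolved;
}
```

With this shape, `resolveFeatures({ tweetBox: true, tweetFeed: { count: 10 } })` enables the tweet box with its default label and raises the feed size, while untouched features keep their defaults.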

The Impact (Contribution to the Community)

- [*]Widespread Adoption: The project became a go-to resource for the web development community, widely cited in technical tutorials and utilized in diverse commercial and personal projects.

- [*]Developer Empowerment: By abstracting the complexity of the Twitter @Anywhere API, I enabled developers to implement professional-grade social features in a fraction of the usual development time.

- [*]Proof of Craftsmanship: The project serves as a public testament to my commitment to Maintainable Code and architectural clarity—values I bring to every private client engagement.

Runtime environment

- [*]Browser

Development stack

- [*]JavaScript

- [*]jQuery

- [*]Twitter API

- [*]Anywhere API

- [*]Git

JBF / Fiducia & GAD (Atruvia) / 2009 - 2014

Role

- [*]Senior Java Framework Engineer

Sector

- [*]FinTech

- [*]Banking Infrastructure

The Challenge

Directly supporting the evolution and stabilization of the central Java Based Banking Framework (JBF). As the foundational layer for the Bankarbeitsplatz (BAP) ecosystem, the framework required constant modernization to support new business requirements while ensuring zero-downtime reliability for enterprise banking operations.

Key Contributions

- [*]Modular Architecture: Designed and implemented core packages and modules to extend the framework’s functional reach across the enterprise.

- [*]Strategic Refactoring: Executed a systematic redesign of critical legacy components, significantly reducing technical debt and improving system maintainability.

- [*]Performance Engineering: Optimized core classes and data handling components to ensure the framework could handle increasing loads and modern security demands.

- [*]Ecosystem Stability: Provided deep-level technical support to ensure that all subprojects — including "Kreditsicherheiten" — maintained architectural consistency with the core JBF.

Runtime environment

- [*]Windows 7/XP

Development stack

- [*]Java EE

- [*]Swing

- [*]Tomcat

- [*]Subversion

Configurations Editor / BMW, Cirquent (NTT DATA) / 2008 - 2009

Role

- [*]Senior Java Component Engineer

Sector

- [*]Logistics

- [*]Enterprise Infrastructure

The Challenge

Designing a specialized XML-based configuration editor to manage highly complex software logistics entities. The primary challenge was to create a tool that could handle deeply nested, interdependent data structures while ensuring strict validation and preventing configuration errors in high-stakes logistics environments.

Key Contributions

- [*]Component Engineering: Designed and implemented modular editor components specifically tailored for the manipulation of complex logistics data models.

- [*]Data Integrity: Developed robust logic for XML-based configuration management, ensuring that changes to logistics entities remained consistent and error-free.

- [*]Architectural Flexibility: Built extensible editor parts that allow for the seamless addition of new logistics entities and rules as system requirements evolve.

- [*]User-Centric Logic: Focused on streamlining the "Developer Experience" (DX) for internal teams, making the management of large-scale configuration files more intuitive and reliable.

Runtime environment

- [*]Windows XP

Development stack

- [*]Java SE

- [*]Swing

- [*]CUF

- [*]Tomcat

- [*]Subversion

Intellegio / UP-MED / 2007 - 2008

Role

- [*]Senior Systems & UI Engineer

Sector

- [*]Medical Engineering

- [*]Real-time Monitoring

The Challenge

The (re)implementation and functional extension of a specialized, tablet-based bedside monitor. The goal was to provide high-fidelity, real-time graphical presentation of critical physiological data — such as intracranial pressure and temperature — within high-stakes clinical environments.

Key Contributions

- [*]Real-time Data Visualization: Engineered high-performance graphical components to render high-frequency sensor data with minimal latency, giving medical professionals an immediate view of vital changes.

- [*]System Modernization: Refactored the core application logic to optimize performance on mobile/tablet hardware while maintaining strict medical-grade data integrity.

- [*]Multi-Sensor Integration: Extended the monitoring framework to support a wider array of telemetry inputs, enabling a more comprehensive diagnostic view at the point of care.

- [*]Reliability Standards: Focused on implementing "Zero-Failure" software patterns, essential for devices used in critical patient monitoring.
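
A common building block for plotting a continuous sensor stream is a fixed-size ring buffer that always holds the most recent samples. The sketch below shows that general technique in JavaScript for brevity; it is an assumption made for illustration and not the monitor's actual C++ implementation, and the `SampleRing` name is hypothetical.

```javascript
// Fixed-size ring buffer holding the most recent sensor samples for plotting.
// Assumption: a generic technique, not the monitor's actual implementation.
class SampleRing {
  constructor(capacity) {
    this.values = new Float64Array(capacity);
    this.capacity = capacity;
    this.count = 0;
    this.next = 0; // index where the next sample is written
  }

  // Store a sample, overwriting the oldest one once the buffer is full.
  push(value) {
    this.values[this.next] = value;
    this.next = (this.next + 1) % this.capacity;
    if (this.count < this.capacity) this.count += 1;
  }

  // Return the samples oldest-first, ready to be drawn left to right.
  toArray() {
    const start = (this.next - this.count + this.capacity) % this.capacity;
    const out = [];
    for (let i = 0; i < this.count; i += 1) {
      out.push(this.values[(start + i) % this.capacity]);
    }
    return out;
  }
}
```

Because memory is allocated once and writes are constant-time, the acquisition path never has to wait on allocation while the display redraws from the same buffer.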

Runtime environment

- [*]Linux (Ubuntu)

- [*]LFS (Linux from Scratch)

- [*]TCP

- [*]UDP

- [*]IP

- [*]SQLite

Development stack

- [*]C++

- [*]Python

- [*]wxWidgets

- [*]wxSQLite3

- [*]Subversion

magoona.de / thomasbillenstein.com / 2007

Role

- [*]Lead Full-stack Architect

- [*]Product Lead

Sector

- [*]Consumer Internet

- [*]Web 2.0

The Challenge

The end-to-end development, launch, and live operation of a highly interactive, personalized portal. The goal was to redefine the browser experience by creating a "webDesktop": a centralized hub for productivity, entertainment, and community features that felt as responsive as a local operating system.

Building a "webDesktop" (often called a Rich Internet Application or RIA) during the Web 2.0 era required deep knowledge of state management and asynchronous communication long before modern frameworks like React existed.

Key Contributions

- [*]End-to-End Lifecycle: Managed the entire product journey, from initial architectural design and implementation to global launch and day-to-day operations.

- [*]Advanced AJAX Architecture: Engineered a sophisticated, asynchronous UI framework to support a stateful, personalized desktop environment within the browser.

- [*]Integrated Productivity Suite: Developed a modular array of productivity and entertainment tools, ensuring seamless data synchronization and user-specific configurations.

- [*]Community & Social Logic: Implemented robust community support systems, fostering user interaction and engagement within a secure, scalable web environment.

Runtime environment

- [*]Linux (SuSE)

- [*]Windows XP

- [*]XAMPP

- [*]LAMP

- [*]MySQL 5

Development stack

- [*]PHP5

- [*]JavaScript

- [*]Script.aculo.us JavaScript Library

- [*]Prototype JavaScript Library

- [*]Smarty Template Engine

- [*]Subversion

SA3 / BMW, Softlab (NTT DATA) / 2006 - 2007

Role

- [*]Senior Architect

- [*]Full-stack Engineer

Sector

- [*]Automotive

- [*]Enterprise Commerce

The Challenge

The internationalization and functional expansion of the SA3 Automobile Sales System for the European market. The project required transforming a localized system into a multi-jurisdictional platform capable of handling diverse tax structures, complex discount variants, and cross-border car configuration rules while maintaining seamless contract lifecycle management.

In the automotive world, moving a sales system from a single market to a European-wide rollout (SA3) involves massive logical challenges—handling cross-border tax laws, regional pricing variations, and deep legacy integrations.

Key Contributions

- [*]Internationalization (i18n) & Localization: Engineered the core logic to support European-wide market requirements, specifically focusing on dynamic tax calculation engines and regional discount frameworks.

- [*]Full Sales-Cycle Engineering: Extended and refined the system’s capabilities for real-time car configuration, automated quotation generation, and end-to-end contract management.

- [*]Systems Integration: Designed and implemented high-integrity interfaces to synchronize the sales platform with existing enterprise back-office applications and logistics databases.

- [*]Business Logic Optimization: Refactored complex ordering workflows to ensure data consistency from the initial customer quote through to final contract execution across multiple regional markets.
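
The idea of market-specific tax and discount rules can be sketched as a small rule table plus one calculation function. This is shown in JavaScript for brevity; the market codes, the rates and the discount rule are placeholder assumptions and do not reflect the actual SA3 rules.

```javascript
// Per-market price calculation in integer cents to avoid floating-point drift.
// Assumption: markets, rates and the discount rule are placeholders, not SA3's rules.
const MARKETS = {
  DE: { vatPercent: 19 },
  AT: { vatPercent: 20 },
};

function quote(market, listPriceCents, discountPercent = 0) {
  const rules = MARKETS[market];
  if (!rules) throw new Error(`Unknown market: ${market}`);
  // Apply the discount first, then compute tax on the discounted net price.
  const netCents = Math.round((listPriceCents * (100 - discountPercent)) / 100);
  const vatCents = Math.round((netCents * rules.vatPercent) / 100);
  return { netCents, vatCents, grossCents: netCents + vatCents };
}
```

Keeping the rules in data rather than in code means a new market is added by extending the table, not by touching the calculation.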

Runtime environment

- [*]Windows XP

- [*]Solaris

- [*]Linux

- [*]BEA WebLogic

- [*]Oracle

Development stack

- [*]Java (J2EE, J2SE)

- [*]Swing

- [*]Subversion

BI / O2 / 2006

Role

- [*]Senior Full-stack Developer

- [*]BI Systems Engineer

Sector

- [*]Business Intelligence

- [*]Marketing Technology

The Challenge

Developing a centralized administration platform to empower the Business Intelligence Center in managing high-volume customer campaigns. The objective was to build a bridge between complex backend data analytics and operational campaign execution, requiring a tool that combined sophisticated configuration logic with intuitive reporting.

Key Contributions

- [*]Campaign Lifecycle Management: Engineered the core administrative framework for the end-to-end management of customer campaigns, from initial rule-based configuration to final execution.

- [*]BI Data Integration: Developed robust interfaces to the Business Intelligence backend, ensuring seamless data flow between customer segmentation models and the campaign tool.

- [*]Analytics & Reporting Engine: Designed and implemented a reporting module to visualize campaign metrics and performance, providing stakeholders with real-time insights for data-driven decision-making.

- [*]Operational Efficiency: Streamlined the workflow for campaign managers by automating complex configuration tasks, significantly reducing the "time-to-market" for new customer outreach initiatives.

Runtime environment

- [*]Windows 2000

- [*]Oracle

Development stack

- [*]Java (J2EE, J2SE)

- [*]JavaScript

- [*]Spring

- [*]JSP

- [*]Subversion

QA / Fiducia & GAD (Atruvia) / 2006

Role

- [*]Senior Systems Integrity Engineer

- [*]QA Automation Engineer

Sector

- [*]FinTech

- [*]Banking Infrastructure

The Challenge

Ensuring the flawless rollout of a large-scale banking self-service platform. This involved securing the stability of the software across a diverse fleet of hardware, managing complex defect remediation cycles, and automating the deployment and testing processes to ensure high-velocity, low-risk releases.

Key Contributions

- [*]Systems Integrity & Bugfixing: Conducted deep-level defect analysis and provided expert support for critical bugfixing, ensuring the platform met the uncompromising reliability standards of the financial industry.

- [*]Automated Testing Frameworks: Designed and implemented custom tools and programs to automate test execution and result analysis, transforming manual QA into a scalable, repeatable process.

- [*]Deployment Automation: Engineered specialized tools to automate the software installation and configuration process for banking self-service devices, significantly reducing rollout time and human error.

- [*]Advanced Test Strategy: Developed and executed complex test cases, ranging from standard operational flows to specialized "edge-case" scenarios required for secure financial transactions.

Runtime environment

- [*]Windows XP/NT

Development stack

- [*]Java (J2EE, J2SE)

- [*]C++

- [*]Perl

OPT / Stadtsparkasse München / 2005

Role

- [*]Senior Security Systems Engineer

Sector

- [*]FinTech

- [*]Banking Cryptography

The Challenge

The primary objective was the implementation of OPT functionality to enable the secure online injection of cryptographic keys into terminal HSMs. This required architecting a high-security bridge between banking self-service devices and ZKA-certified external providers, ensuring that key injection followed strict, audit-ready procedures.

Implementing OPT (Online Personalization of Terminals) is a critical task that ensures the cryptographic integrity of banking hardware, directly preventing fraud and ensuring regulatory compliance.

Key Contributions

- [*]Hardware-Level Security: Extended the system interface to the Encrypted PIN Pad (EPP), enabling secure hardware-to-software communication for cryptographic operations.

- [*]Protocol Engineering: Engineered the logic for the generation and validation of ISO data records, ensuring that all transactional and personalization data adhered to international banking communication standards.

- [*]HOST Communication Enhancement: Refactored and enhanced the HOST communication software modules to support the robust exchange of ISO data records between terminals and central banking servers.

- [*]Cryptographic Compliance: Managed the integration of secure key-exchange methods, ensuring the personalization process met the rigorous requirements of external certification bodies (ZKA).

Runtime environment

- [*]Windows XP/2000

- [*]WebSphere MQ

Development stack

- [*]Java (J2SE)

- [*]J/XFS

- [*]CSCW32

- [*]OpenSSL

- [*]Subversion

- [*]CVS

cheRSS / objectware / 2004

Role

- [*]Systems Architect

- [*]Lead Mobile Developer

Sector

- [*]Mobile Technology

- [*]Information Systems

The Challenge

Developing a high-performance news aggregator tailored for the first generation of smartphones and PDAs. The primary challenge was the efficient parsing and synchronization of heterogeneous data feeds (RSS, RDF, and ATOM) over limited-bandwidth connections and on devices with restricted processing power and screen real estate.

Key Contributions

- [*]Multi-Protocol Engine: Engineered a robust parser capable of handling various XML-based standards (RSS 0.9x/1.0/2.0, RDF, and ATOM), ensuring compatibility across a wide range of global news sources.

- [*]Resource-Optimized Sync: Developed lightweight synchronization logic designed to minimize battery consumption and data usage, critical for early mobile hardware.

- [*]Adaptive Mobile UI: Designed and implemented a responsive interface optimized for small-form-factor devices, prioritizing readability and intuitive navigation for text-heavy content.

- [*]Local Data Management: Built an efficient caching layer to allow for offline reading, a pioneering feature for mobile data applications at the time.
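
One way to handle several feed dialects with a single parser is to inspect the root element first and then dispatch to a format-specific reader. The sketch below shows only that detection step, in JavaScript rather than the original J2ME code; it is a simplified assumption using regular expressions, where a real parser would use a proper XML tokenizer, and `detectFeedFormat` is a hypothetical name.

```javascript
// Detect the feed dialect from the document's root element.
// Assumption: simplified sketch; a real parser would use an XML tokenizer.
function detectFeedFormat(xml) {
  const body = xml
    .replace(/<\?[\s\S]*?\?>/g, '')     // XML declaration, processing instructions
    .replace(/<!--[\s\S]*?-->/g, '')    // comments
    .replace(/<!DOCTYPE[^>]*>/gi, '');  // simple doctype declarations

  // First element name, ignoring an optional namespace prefix such as "rdf:".
  const match = body.match(/<(?:[\w.-]+:)?([\w.-]+)/);
  if (!match) return 'unknown';

  switch (match[1]) {
    case 'rss': return 'rss';   // RSS 0.91+ and 2.0 use <rss>
    case 'RDF': return 'rdf';   // RDF-based feeds such as RSS 1.0 use <rdf:RDF>
    case 'feed': return 'atom'; // Atom uses <feed>
    default: return 'unknown';
  }
}
```

Detecting the dialect up front keeps each format reader small, which matters on devices with little memory.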

Runtime environment

- [*]Windows XP

- [*]Nokia Mobile

Development stack

- [*]Java (J2ME)

- [*]MIDP

- [*]CLDC

- [*]Sun Wireless Toolkit

- [*]Nokia Developer's Suite

- [*]Motorola J2ME SDK

- [*]Subversion

Banking Self-Service Devices / Stadtsparkasse München, Wincor Nixdorf / 2003

Role

- [*]Senior Migration Architect

- [*]Java Engineer

Sector

- [*]FinTech

- [*]Infrastructure Modernization

The Challenge

Strategic porting of the "Banking Self-Service" application suite to the Wincor Nixdorf hardware platform. This was not a simple port, but a fundamental architectural shift: migrating from a legacy environment to Windows XP/2000 and transitioning the entire communication stack to meet modern enterprise standards.

The work established a path for moving legacy systems into modern environments, specifically from proprietary protocols (SNA) to enterprise messaging standards (MQ) and from low-level C++ to portable Java.

Key Contributions

- [*]Programming Language Transformation: Executed a comprehensive rewrite of critical system modules, migrating low-level C/C++ logic into a robust Java-based architecture to improve maintainability and cross-platform compatibility.

- [*]Middleware Re-Engineering: Spearheaded the transition of the entire HOST communication layer, replacing the legacy SNA protocol with WebSphere MQ (IBM MQ) for high-reliability asynchronous messaging.

- [*]Platform Adaptation: Integrated the application suite with the Wincor Nixdorf hardware environment, ensuring seamless interaction between the new Java-based logic and the underlying Windows-based OS.

- [*]Architectural Decoupling: Leveraged MQ-based messaging to decouple the terminal applications from the mainframe, significantly increasing system resilience and scalability.

Runtime environment

- [*]Windows XP/2000

- [*]WebSphere MQ

Development stack

- [*]Java (J2SE)

- [*]J/XFS

- [*]CVS

CIM86 / Gunnebo Deutschland / 2003

Role

- [*]Senior Embedded Systems Developer

Sector

- [*]FinTech

- [*]Hardware Security

The Challenge

Developing a high-performance C++ communication library to interface with CIM86 hardware units via the RS232 serial protocol. The CIM86 box serves as a specialized security module for the authentication of EC (Electronic Cash) cards, requiring a communication layer that is both extremely reliable and strictly timed to handle sensitive financial credentials.

Key Contributions

- [*]Low-Level Protocol Implementation: Engineered a robust C++ library to manage serial communication (RS232), handling bit-level data exchange and signal synchronization with the CIM86 hardware.

- [*]Secure Authentication Logic: Implemented the driver-level logic required for the secure processing of EC card data, ensuring integrity during the authentication handshake.

- [*]Resource Efficiency: Designed the library with a focus on high-performance memory management and deterministic execution, essential for hardware-near financial applications.

- [*]Hardware Abstraction: Provided a clean, high-level API for application developers, abstracting the complexities of the serial protocol and hardware state-management.
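
Serial links of this kind usually wrap each message in a frame with start and end markers and a checksum. The sketch below shows a generic STX/ETX frame with an XOR checksum (LRC), written in JavaScript for brevity; the CIM86 wire protocol is not described here, so the frame layout and all names are assumptions for illustration only.

```javascript
// Generic serial frame: STX, payload, ETX, LRC (XOR over payload and ETX).
// Assumption: illustrative framing only, not the CIM86 wire protocol.
const STX = 0x02;
const ETX = 0x03;

function lrc(bytes) {
  return bytes.reduce((acc, b) => acc ^ b, 0);
}

function encodeFrame(payload) {
  const body = [...payload, ETX];
  return Uint8Array.from([STX, ...body, lrc(body)]);
}

// Validates markers and checksum, then returns the bare payload.
function decodeFrame(frame) {
  if (frame.length < 3 || frame[0] !== STX) throw new Error('Missing STX');
  const body = Array.from(frame.slice(1, -1));
  if (body[body.length - 1] !== ETX) throw new Error('Missing ETX');
  if (lrc(body) !== frame[frame.length - 1]) throw new Error('Checksum mismatch');
  return Uint8Array.from(body.slice(0, -1));
}
```

A high-level API can then expose only payloads to application code, while framing errors surface as exceptions that trigger a retry.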

Runtime environment

- [*]Windows XP/NT

Development stack

- [*]Visual C++

HOST communication / Reis Eurosystems / 2003

Role

- [*]Senior Protocol Architect

- [*]C++ Engineer

Sector

- [*]FinTech

- [*]Banking Infrastructure

The Challenge

Engineering a high-integrity C++ communication library to bridge physical coin deposit machines with central mainframe systems. The primary technical hurdle was the implementation of the SNA LU0 (Systems Network Architecture) protocol, a low-level IBM networking standard required for secure, direct communication with legacy banking mainframes.

Interfacing physical hardware with mainframe architectures using SNA LU0 requires a rare level of technical depth, particularly in how data is packetized and transmitted across high-security financial networks.

Key Contributions

- [*]Protocol Stack Development: Successfully implemented the complex SNA LU0 protocol in C++, enabling structured data exchange between distributed edge hardware and the central host.

- [*]Mainframe Interoperability: Engineered the translation layer required to convert real-time hardware events into mainframe-compatible data formats, ensuring 100% accuracy in financial auditing.

- [*]Deterministic Communication: Developed robust error-handling and recovery routines for the serial/network bridge, critical for maintaining transaction integrity in automated banking environments.

- [*]Low-Level Optimization: Optimized the C++ library for high reliability and low latency, ensuring the physical hardware-to-mainframe handshake remained stable under heavy operational loads.

Runtime environment

- [*]Windows 2000/NT

- [*]IBM Communication Server

- [*]IBM Personal Communications

- [*]SNA

- [*]Ethernet

- [*]TokenRing

Development stack

- [*]Visual C++

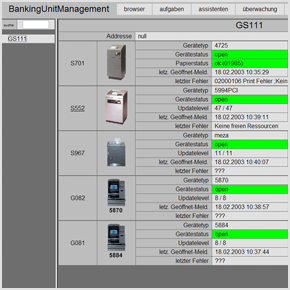

Banking Unit Management / Stadtsparkasse München, IBM, NCR, Wincor Nixdorf / 2000 - 2003

Role

- [*]Lead Systems Architect

- [*]Full-stack Developer

Sector

- [*]FinTech

- [*]Enterprise Remote Management

The Challenge

Designing and implementing an end-to-end intranet solution for the remote monitoring and administration of a global fleet of banking self-service devices. The goal was to provide real-time visibility into device health and the ability to execute remote commands across a secure, distributed network.

Key Contributions

- [*]Distributed Architecture: Engineered a tiered management ecosystem consisting of a lightweight SBM Agent for edge devices, a high-throughput SBM Server, and a responsive Web Application.

- [*]Real-time Monitoring & Event Handling: Developed the server-side logic to asynchronously collect state information and process critical events from thousands of remote agents simultaneously.

- [*]Command Orchestration: Implemented a secure delegation framework, allowing administrators to push remote commands and software updates to devices with guaranteed execution and logging.

- [*]Unified Administrative Interface: Built a centralized web-based dashboard that provided a high-level overview of the device fleet, enabling rapid incident response and remote maintenance.
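
The agent-to-server reporting described above can be sketched as a small in-memory registry that records each agent's last report and flags devices that report a fault or fall silent. The message shape, the names and the timeout are hypothetical and do not describe the actual SBM design.

```javascript
// In-memory view of device health built from agent reports.
// Assumption: message shape, names and timeout are hypothetical, not the SBM design.
class FleetMonitor {
  constructor(timeoutMs) {
    this.timeoutMs = timeoutMs;
    this.devices = new Map(); // deviceId -> { status, lastSeen }
  }

  // Called whenever an agent reports its state.
  report(deviceId, status, now = Date.now()) {
    this.devices.set(deviceId, { status, lastSeen: now });
  }

  // Devices that reported a fault or have gone silent for too long.
  needsAttention(now = Date.now()) {
    const result = [];
    for (const [deviceId, { status, lastSeen }] of this.devices) {
      if (status !== 'ok') {
        result.push({ deviceId, reason: status });
      } else if (now - lastSeen > this.timeoutMs) {
        result.push({ deviceId, reason: 'silent' });
      }
    }
    return result;
  }
}
```

Treating silence as a condition of its own lets a dashboard distinguish a device that reports a problem from one that has dropped off the network.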

Runtime environment

- [*]Windows XP

- [*]Linux

- [*]TCP/IP

- [*]Ethernet

- [*]TokenRing

Development stack

- [*]Java (J2SE, J2EE)

- [*]JavaScript

- [*]C/C++

- [*]JSP

- [*]CVS

Banking Self-Service Devices / Stadtsparkasse München, IBM / 1996 - 2000

Role

- [*]Senior Systems Architect

- [*]Lead Developer

Sector

- [*]FinTech

- [*]Banking Hardware & Services

The Challenge

Developing a comprehensive, multi-component application for a diverse fleet of IBM banking self-service devices (Account Statement Printers and Service Terminals). The goal was to build a unified, high-availability system that could handle everything from complex financial transactions to direct hardware control and secure mainframe communication.

The most interesting aspect of the challenge was covering the entire stack, from the high-level Java business logic down to the C/C++ device drivers that control physical hardware such as magnetic stripe readers and encrypted PIN pads.

Key Contributions

- [*]Multilingual Software Stack: Engineered a hybrid solution using Java for the application layer and C/C++ for low-level system services and device drivers, ensuring a balance between business logic agility and hardware performance.

- [*]Full-Spectrum Financial Services: Implemented a robust suite of banking features, including automated payment transactions (transfers, standing orders), portfolio management, and real-time account services.

- [*]Core System Infrastructure: Designed and built the "under-the-hood" services, including a custom System Kernel, Loader, and Configuration Server, as well as a high-security SNA-based HOST communication module.

- [*]Hardware Interfacing & Drivers: Authored high-precision C/C++ drivers for critical peripherals, including Magnetic Stripe Readers, Document Printers, and Encrypted PIN Pads (EPP), ensuring secure and reliable physical interactions.

Runtime environment

- [*]OS/2

- [*]SNA

- [*]TCP/IP

- [*]Ethernet

- [*]TokenRing

Development stack

- [*]Java (J2SE)

- [*]C/C++

- [*]SUN JDK 1.x

- [*]IBM C/C++

- [*]LANDP

- [*]CVS

About

- [*]I develop software.

- [*]I build systems.

- [*]I write code.

I am a freelance System Architect and Software Engineer with a career spanning over 35 years of technical evolution.

Since graduating from the Technical University of Munich (Electrical Engineering/Data Processing), I have navigated the shift from low-level systems to modern cloud-native architectures. This long-term perspective allows me to build software that isn't just functional today, but maintainable for the next decade.

I have had the privilege of solving complex engineering challenges for industry leaders — including IBM, NCR, O2, BMW, NTT DATA, MBC Group — bridging the gap between ambitious business goals and robust technical execution.

Whether you are modernizing a legacy system or architecting a new ecosystem from the ground up, I bring the precision of an engineer and the vision of a strategist.

Let’s build something that lasts. Feel free to reach out.